Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

Sora was the big bad beast of an announcement for OpenAI, but there are several more days ahead. What else could they have planned?

We’ll continue to keep an eye on OpenAI this week. If you’re curious, check back in with Tom’s Guide to see what’s coming.

For now, here’s what we think could be announced tomorrow:

Sora is here, at least in the United States, and assuming you can get through the heavy traffic.

There is a lot going on with the AI video generation tool, including storyboarding, impressive capabilities and integration with ChatGPT if you are a paid subscriber.

For more details and to learn how to access Sora, check out our full breakdown of what’s new and what Sora is capable of.

We’ve tried logging into Sora and have had trouble getting in, either locally or via a VPN.

Users on Reddit and other social platforms are also reporting an inability to sign up for the platform. One user said that they couldn’t even generate videos because the queue is full.

Additionally, those of you in Europe and the UK won’t be able to access the platform (without a VPN) because of local content laws, which require extra compliance measures. OpenAI is working with the governments there, but we don’t know when Sora will actually be available in those regions.

Sora is here for Plus and Pro users at no additional cost! Pushing the boundaries of visual generation will require breakthroughs both in ML and HCI. Really proud to have worked on this brand new product with @billpeeb @rohanjamin @cmikeh2 and the rest of the Sora team!… pic.twitter.com/OjZMDDc7ma

December 9, 2024

OpenAI VP of Research Aditya Ramesh has shared a video made using Sora that shows jellyfish in the sky. He wrote on X that: “Pushing the boundaries of visual generation will require breakthroughs both in ML and HCI.”

Bill Peebles, Sora lead at OpenAI, shared a cute, looped image of “superpup” with a city in front of him. The loop feature looks like an interesting one to try.

The Sora feed is available to view at Sora.com, even if you are in the EU or UK. If you are anywhere else in the world you can also start creating videos.

ChatGPT Plus subscribers get 50 generations per month, while ChatGPT Pro subscribers get 500 normal generations per month plus unlimited slow generations. Plus costs $20 a month, and Pro costs $200 a month but also includes unlimited o1 and Advanced Voice.

Sora is a standalone product but tied to a ChatGPT subscription. Any ChatGPT account can view the feed — with videos made by others — but only paid accounts can make videos.

From today it is available everywhere except the UK and EU, though those places will get access “at some point”. Altman says they are “working very hard to bring it to those places”.

“Sora is a tool that lets you try multiple ideas at once and try things not possible before. It is an extension of the creator behind it,” the company says, adding that you won’t be able to just make a full movie at the click of a button.

Altman says this is early and will get a lot, lot better.

There was some speculation that Sora would be incorporated into ChatGPT, but OpenAI confirmed it is a completely standalone product found at Sora.com. However, if you have a ChatGPT Plus or Pro subscription, access to Sora is included.

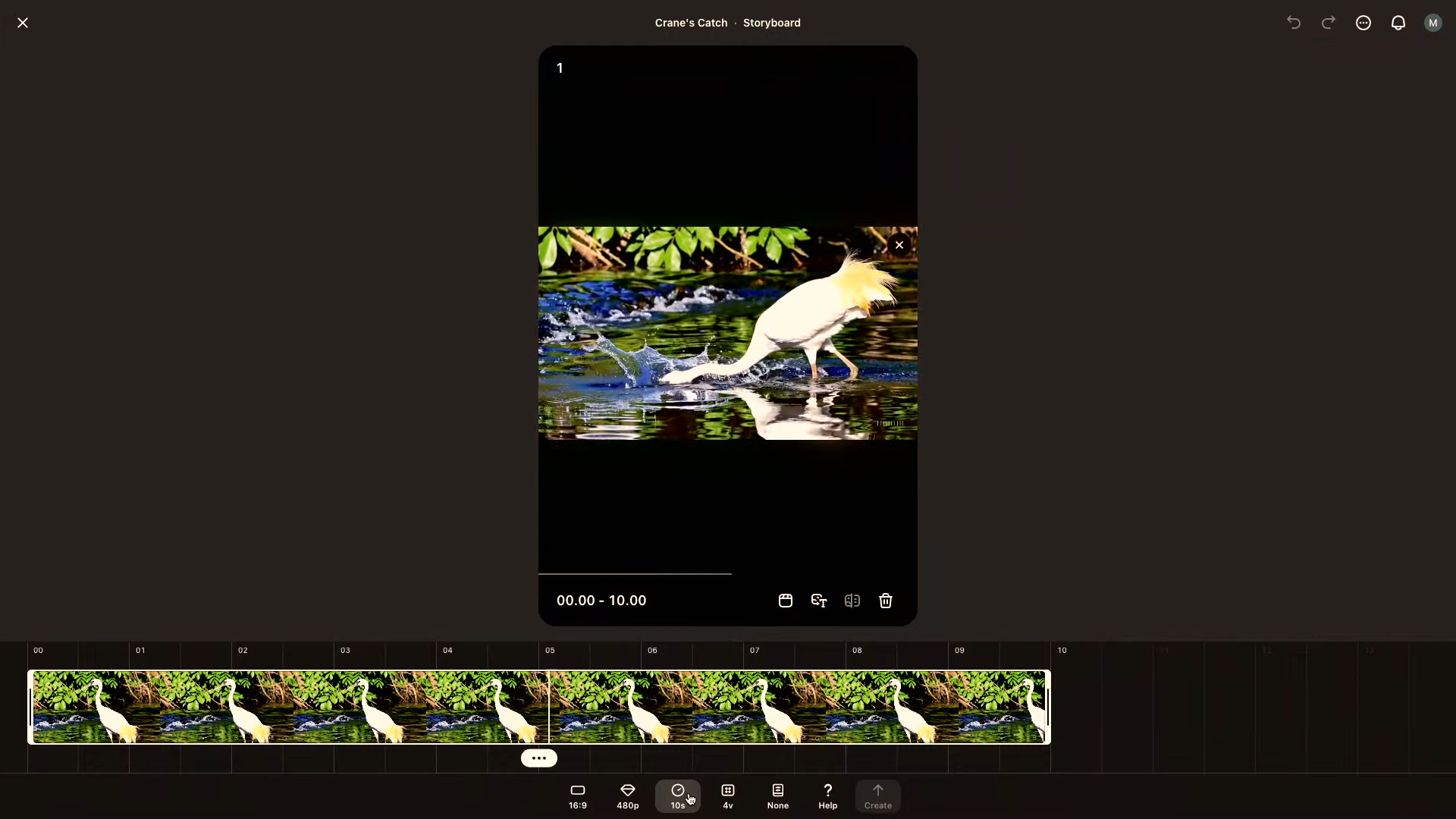

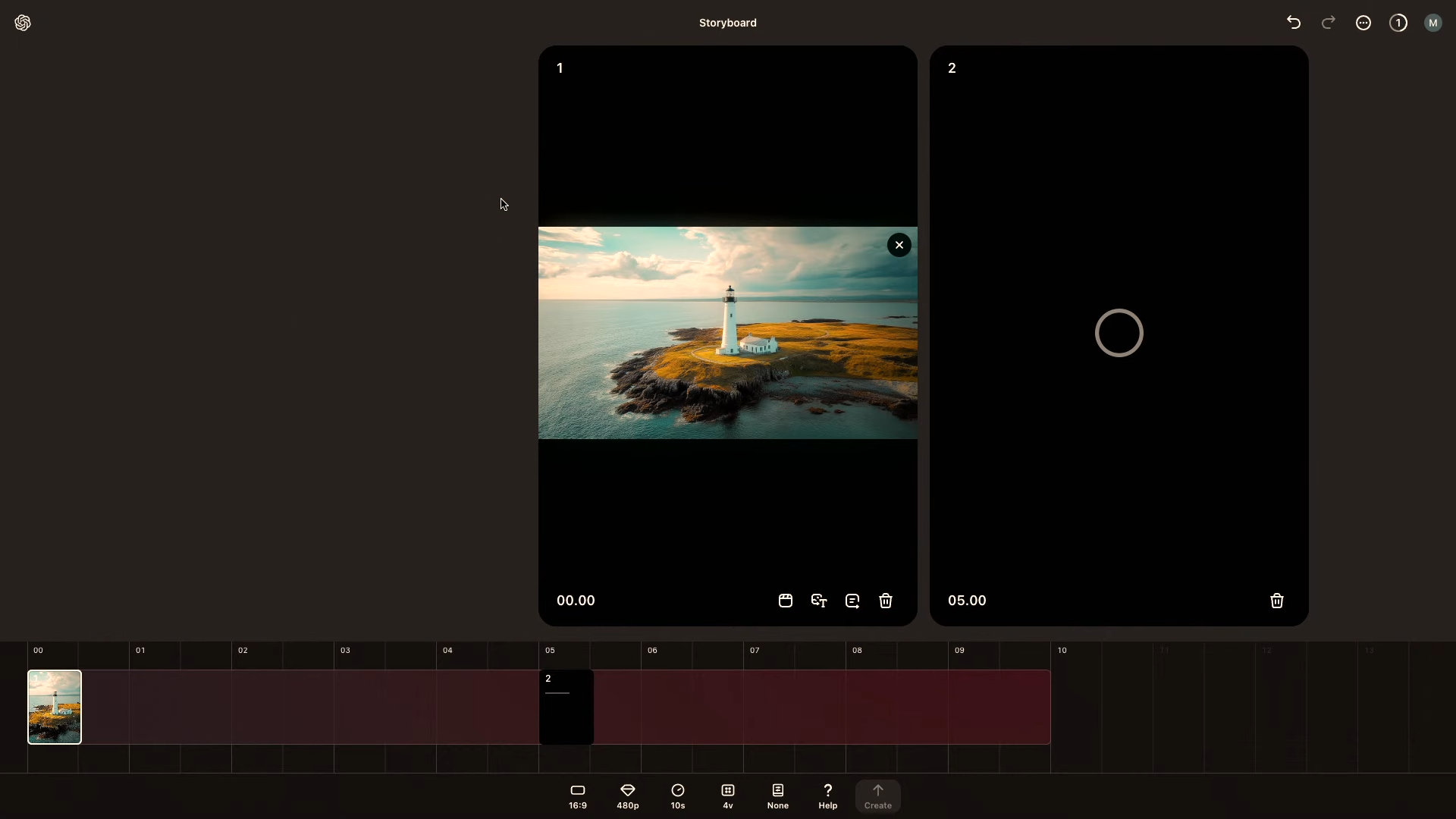

It has an interface similar to Midjourney’s, with a menu down the side, but with a prompt box that floats over the top. The biggest new feature is the Storyboard view, which looks more like a traditional video editing platform.

Other key features include the ability to remix a video. In one example they turned woolly mammoths into robots. Another is blending one video into another. You can also manipulate different elements within a video and share them with others.

You can also remix videos that others have shared in the community feed.

OpenAI’s Sora includes a new ‘Storyboard’ view that lets you generate videos and place them on a timeline. Sora can then fill in the gaps from the start of the timeline to where you place the image or prompt.

If you place an image at the start, Sora will create a prompt at the appropriate point in the timeline. So if you add that prompt 10 seconds in, it will generate the motion from your image to the prompt. This allows you to fully customize the flow of the video by adding keyframes in the timeline view.

Once the video is generated, you get more control over how it looks. Remix is one such feature: it lets you describe changes to the video (such as swapping mammoths for robots), and Sora will make the change while leaving everything else the same.

They were able to demonstrate the changes in real time, suggesting it takes less than a minute to generate lower-resolution videos, roughly in line with Runway’s generation times.

“Half of the story of Sora is taking a video, editing it and building on top of it,” OpenAI explained during the livestream.

During a demo in the livestream, OpenAI generated a video of woolly mammoths, revealing the creation workflow, including a Haiper-like toolbar.

The video took a few minutes to create and included options like resolution (480p, 720p and 1080p) as well as duration of the clip starting at 10 seconds.

Videos seem to be as good as we expected from previews and you only need a ChatGPT account to sign up for Sora.com. It is available today, so I’d love to see what you create.

Sam Altman is back to announce Sora and with it we get our first ‘official’ look at the new video model. So far, so good. It looks impressive and Altman explains that “video is important” on the path to artificial general intelligence.

The company confirmed Sora would be launching today from sora.com. It is a “completely new product experience” but is covered by the cost of ChatGPT Plus.

Today’s announcement is Sora Turbo which runs faster than the previous version. It includes a Midjourney-style community view with videos generated by others.

Day 3 of OpenAI’s 12 Days of OpenAI announcement event is live on YouTube. By this point we pretty much know what is going to be announced, but we don’t know how or specifics.

Sora is going to be the most visual of all the announcements coming out of these 12 days, and so I’m hoping for something a little more than a roundtable — but who knows.

We will have all the details and information as it happens, with follow-up stories over the coming days.

I have been waiting impatiently for the best part of a year for OpenAI to release its AI video generator Sora to the general public. Every new video drop or project added to my impatience.

Now, it looks like we’re a mere hour away from the launch of what looks to be a generation ahead of any other commercial or open-source model currently on the market.

What we don’t know is how much it will cost, how you will access it or where it will be available. There are some possible leaks pointing to this on X, including one from Kol Tregaskes showing some screenshots that ‘may’ be from a Sora site.

These suggest you will be required to commit to not using it for certain types of content, to ensure you have rights to any images you upload, and to not create depictions of people without permission. The UI seems very similar to Haiper with elements of Midjourney and appears to support both landscape and portrait. It will run independently of ChatGPT.

We will know for sure after 1pm ET.

Sora, OpenAI’s state-of-the-art AI video generator, IS launching today, at least according to Marques Brownlee. Speeding to X and YouTube with a post on the new model, the tech reviewer shared video footage generated with Sora two hours before the livestream.

OpenAI is scheduled to go live at 1pm ET with a fresh announcement as part of its 12 Days of OpenAI event. So far we’ve had the full o1 model and a research update. It was strongly hinted that today’s announcement would be the long-awaited Sora.

If Brownlee’s video is real, then we’re in for something special from the OpenAI flagship, offering degrees of realism barely even dreamt of by other models. In his X thread, Brownlee says it is particularly good at landscapes and drone shots but struggles with text and physics.

We’ll find out for sure just how good it is at 1pm. I wonder if Sam Altman will be there for this one, or even if they’ll move beyond the simple roundtable format.

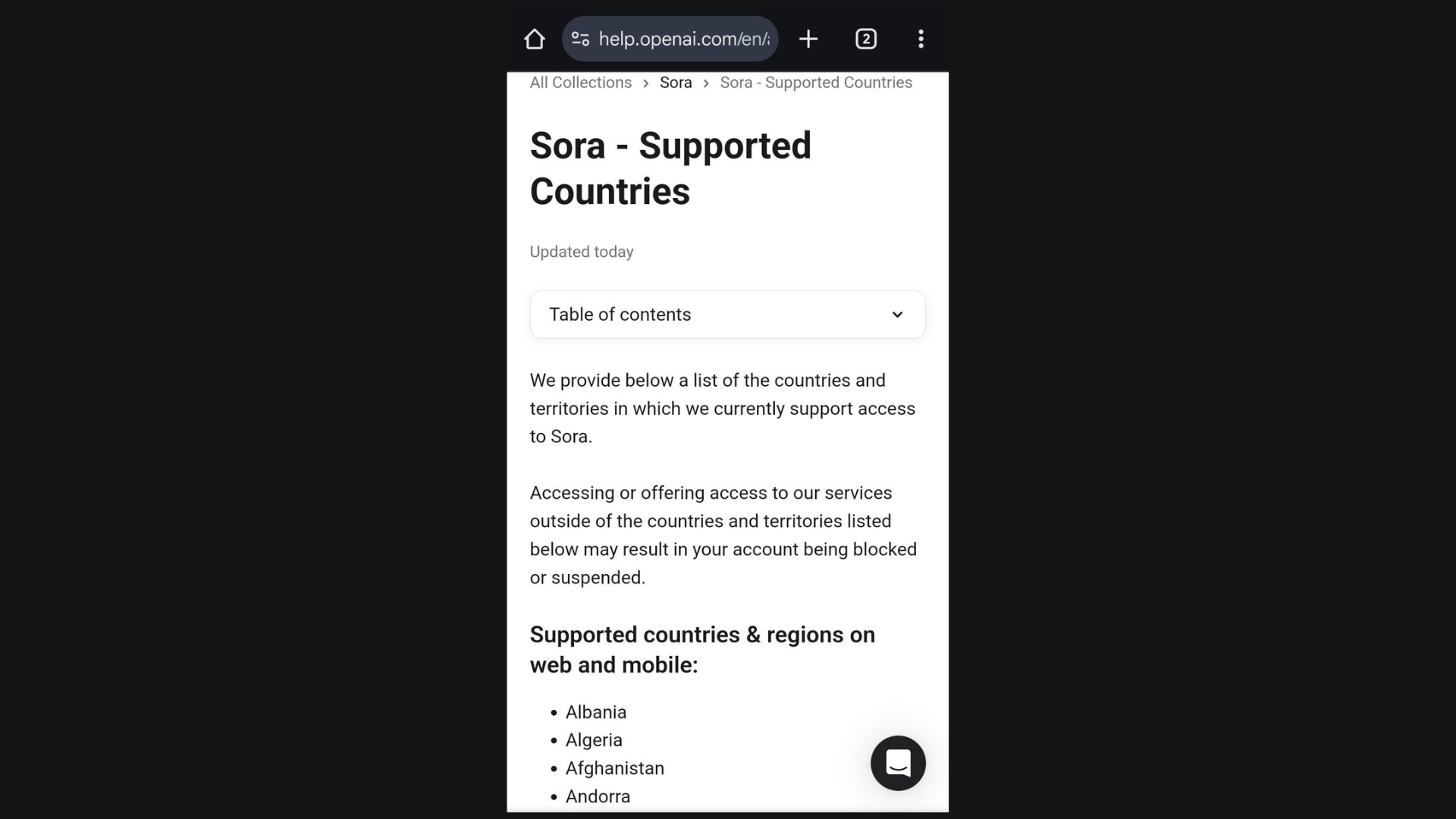

While we’re all on the edge of our seats waiting for a Sora announcement, one user on X noticed that the OpenAI page listed Sora availability by country. However, it looks like that page was pulled. Whoever let the cat out of the bag gave us all hope that something about Sora will be announced soon.

This mysterious page listing raises more questions than answers. Does the inclusion of availability by country suggest that Sora is poised for a global rollout sooner than we think? The post originally stated that it would not be available in the EU and UK. Could this imply features tailored for specific regions or languages? OpenAI has been tight-lipped about the details, but the leak has sparked rampant discussion on X and other platforms, with users theorizing about everything from enhanced language capabilities to integration with existing OpenAI-powered tools like Microsoft’s Copilot. The abrupt removal of the page has only amplified the excitement, with fans now convinced that an official announcement is imminent.

For now, all we can do is wait and speculate. OpenAI has a history of building anticipation for its projects, and the Sora leak has only added to the intrigue.

OpenAI is releasing something new every weekday until December 20 and so far the focus has been on the advanced research and coding side of artificial intelligence.

CEO Sam Altman says there will be something for everyone, with some big and some “stocking stuffer” style announcements. With day one giving us the full version of o1 and day two offering up a way for scientists to fine-tune o1, that promise is bearing fruit.

All roads point to Sora getting a release today. This includes a leak over the weekend showing a version 2 of OpenAI’s AI video model, and today the documentation was updated to show which countries Sora will be available in: everywhere but the EU and UK.

Whatever the AI lab announces, we will have full coverage, and if it’s something we can try, we’ll follow up with a how-to guide and a hands-on with the new toy.