Physical Address

304 North Cardinal St.

Dorchester Center, MA 02124

This week, our Research Roundup covers new advanced LLMs, with technical reports on DeepSeek-V3 and the Qwen2.5 family of models, a modern update of the original BERT encoder model, and a method for improving AI model reasoning by iterating directly in latent space:

DeepSeek-V3 Technical Report

Qwen2.5 Technical Report

ModernBERT: A Fast, Memory Efficient, and Long Context Bidirectional Encoder for Finetuning and Inference

Coconut: Training LLMs to Reason in a Continuous Latent Space

We now have a 100% open-source LLM that beats GPT-4o and Claude 3.5 Sonnet on many benchmarks: DeepSeek-V3, a powerful Mixture-of-Experts (MoE) language model with 671B total parameters, of which 37B are activated for each token. The DeepSeek team shared the model on Hugging Face with a generously open MIT license and provided details on the model in their “DeepSeek-V3 Technical Report.”

The DeepSeek MoE architecture is a fine-grained MoE, with 1 shared expert and 256 routed experts, of which 8 routed experts are active for each token. The architecture also includes Multi-head Latent Attention, which applies low-rank joint compression to the attention keys and values, and multi-token prediction, which is useful for speculative decoding and makes better use of the training data.
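To make the routing concrete, here is a minimal sketch of how a single token could be sent through one shared expert plus the top 8 of 256 routed experts. The scoring function (sigmoid affinities renormalized over the selected experts), the scalar "experts," and the names `route_token` and `moe_layer` are assumptions for illustration, not DeepSeek's actual implementation.

```python
import math

def route_token(affinities, top_k=8):
    """Pick the top_k routed experts for one token and return {expert_id: gate}.

    affinities: one raw router score per routed expert (hypothetical inputs).
    Gates are sigmoid scores renormalized over the selected experts, which is
    an assumption of this sketch.
    """
    scores = [1.0 / (1.0 + math.exp(-a)) for a in affinities]
    chosen = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)[:top_k]
    total = sum(scores[i] for i in chosen)
    return {i: scores[i] / total for i in chosen}

def moe_layer(x, shared_expert, routed_experts, affinities, top_k=8):
    """Shared expert (always active) plus a gated sum of the selected routed experts."""
    gates = route_token(affinities, top_k)
    out = shared_expert(x)
    for expert_id, gate in gates.items():
        out += gate * routed_experts[expert_id](x)
    return out, gates

# Toy usage: 256 routed "experts" that each scale a scalar input.
NUM_ROUTED = 256
routed = [lambda x, s=i: x * (s + 1) / NUM_ROUTED for i in range(NUM_ROUTED)]
shared = lambda x: x
affinities = [math.sin(i) for i in range(NUM_ROUTED)]  # stand-in router scores
y, gates = moe_layer(1.0, shared, routed, affinities)
print(len(gates), "of", NUM_ROUTED, "routed experts ran for this token")
```

Only the 8 selected experts and the shared expert do any work for the token, which is why the active parameter count is so much smaller than the total.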

DeepSeek-V3 was trained on 14.8 trillion tokens using 2,788K H800 GPU hours, at a cost of just $5.6M. This cost-efficient training is due to the fine-grained MoE architecture, the use of FP8 mixed precision in training, and extending the context length in stages during training.
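As a quick sanity check on these figures, the arithmetic can be worked through directly. The sketch below assumes a rental price of $2 per H800 GPU hour, which is the rate the headline cost implies; the derived throughput and active-parameter share are our own back-of-the-envelope numbers.

```python
GPU_HOURS = 2_788_000        # 2,788K H800 GPU hours
PRICE_PER_GPU_HOUR = 2.00    # assumed rental price in USD per GPU hour
TRAINING_TOKENS = 14.8e12    # 14.8 trillion tokens
TOTAL_PARAMS = 671e9
ACTIVE_PARAMS = 37e9

cost_usd = GPU_HOURS * PRICE_PER_GPU_HOUR
tokens_per_gpu_hour = TRAINING_TOKENS / GPU_HOURS
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS

print(f"Estimated cost: ${cost_usd / 1e6:.3f}M")                 # $5.576M
print(f"Tokens per GPU hour: {tokens_per_gpu_hour / 1e6:.2f}M")  # 5.31M
print(f"Active parameter share: {active_fraction:.1%}")          # 5.5%
```

Note that this is a GPU rental estimate only; it does not include research, ablations, or staffing.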

They overcame communication bottlenecks in the training of the large MoE model through algorithm-framework-hardware co-design, achieving efficient use of computation in training. A two-stage context length expansion extended the context first from 4k tokens to 32k tokens and then to 128k tokens. The combined result of these optimizations is extremely high training efficiency, roughly a 10-fold cost reduction compared to Llama, Claude, and similar AI models.

Post-training used SFT and RL to align the model with human preferences and to distill reasoning capabilities from DeepSeek-R1, the team’s reasoning model, which boosted DeepSeek-V3’s reasoning and math performance. Multi-Token Prediction (MTP) enhances model performance during training and can also enable speculative decoding for inference acceleration.
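For readers unfamiliar with speculative decoding, here is a minimal greedy sketch of the idea: a cheap drafter proposes a few tokens ahead, and the full model verifies them, keeping the longest matching prefix. The callables `draft_next` and `target_next` are hypothetical stand-ins (with MTP, the extra prediction heads would play the drafter role), and this is not DeepSeek's inference code.

```python
def speculative_step(prefix, draft_next, target_next, num_draft=2):
    """One greedy speculative-decoding step; returns the tokens accepted.

    draft_next / target_next: hypothetical callables mapping a token list
    to the next token.
    """
    # 1. Draft cheaply: propose num_draft tokens ahead.
    drafted, ctx = [], list(prefix)
    for _ in range(num_draft):
        tok = draft_next(ctx)
        drafted.append(tok)
        ctx.append(tok)

    # 2. Verify: the target model checks each drafted position.
    #    (A real system does this in one batched forward pass.)
    accepted, ctx = [], list(prefix)
    for tok in drafted:
        expected = target_next(ctx)
        if tok != expected:
            accepted.append(expected)  # fix the first mismatch and stop
            return accepted
        accepted.append(tok)
        ctx.append(tok)

    # 3. Every draft matched, so the verification pass yields a bonus token.
    accepted.append(target_next(ctx))
    return accepted

# Toy models over digits: the target counts upward; the drafter errs after a 3.
target = lambda ctx: (ctx[-1] + 1) % 10
drafter = lambda ctx: 0 if ctx[-1] == 3 else (ctx[-1] + 1) % 10

print(speculative_step([1], drafter, target))  # [2, 3, 4]
print(speculative_step([3], drafter, target))  # [4]
```

The output always matches what the target model would have produced on its own; the gain is that several tokens can be confirmed per expensive forward pass when the drafts are good.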

DeepSeek-V3 posts extremely impressive benchmark results for an MoE LLM with only 37B active parameters: 88.5 on MMLU, 59.1 on GPQA, 75.9 on MMLU-Pro, 90.2 on MATH, 51.6 on CodeForces, and more. DeepSeek-V3 is the strongest open-source model currently available and achieves performance comparable to leading closed-source models like GPT-4o and Claude-3.5-Sonnet.

The Qwen2.5 family of LLMs was released in September, and since then the Alibaba Qwen team has released helpful updates, including the Qwen2.5-Coder 32B model, expanded 1 million token context support, and QwQ, a reasoning AI model based on Qwen 32B. This week, they released QvQ, a vision reasoning model built upon Qwen2-VL-72B. This flurry of releases has established Qwen as a leading LLM family, with some of the best AI models for coding, reasoning, and local use.

The Alibaba Qwen team published the Qwen2.5 Technical Report to give further details on this open-weights family of LLMs. The Qwen2.5 family consists of several open-weights base and instruction-tuned models ranging from 0.5B to 72B parameters. Additionally, two proprietary Mixture-of-Experts (MoE) models, Qwen2.5-Turbo and Qwen2.5-Plus, are available. The flagship open-weight model, Qwen2.5-72B-Instruct, rivals the performance of Llama-3-405B-Instruct.

Some of the key features of Qwen2.5 and improvements over prior versions:

The Qwen2.5 LLMs maintain a Transformer-based decoder architecture, utilizing Grouped Query Attention (GQA), SwiGLU activation, Rotary Positional Embeddings (RoPE), QKV bias, and RMSNorm. The tokenization employs byte-level byte-pair encoding (BBPE), with an extended set of control tokens.
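Several of these components are small enough to sketch in a few lines. The following is a plain-Python illustration of RMSNorm, a SwiGLU feed-forward block, and the query-to-KV head mapping used in Grouped Query Attention. The function names, shapes, and toy weights are assumptions for illustration; the real models use tensor libraries and learned weights.

```python
import math

def rms_norm(x, weight, eps=1e-6):
    """RMSNorm: rescale by the root-mean-square of x (no mean subtraction)."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]

def silu(z):
    """SiLU (a.k.a. swish) activation: z * sigmoid(z)."""
    return z / (1.0 + math.exp(-z))

def matvec(matrix, x):
    return [sum(m * v for m, v in zip(row, x)) for row in matrix]

def swiglu_ffn(x, w_gate, w_up, w_down):
    """SwiGLU feed-forward: down( silu(gate(x)) * up(x) )."""
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    hidden = [g * u for g, u in zip(gate, up)]
    return matvec(w_down, hidden)

def kv_head_for(q_head, num_q_heads, num_kv_heads):
    """GQA: consecutive groups of query heads share one key/value head."""
    group_size = num_q_heads // num_kv_heads
    return q_head // group_size

# Toy usage with a 2-dim model and a 3-dim hidden layer (made-up weights).
x = rms_norm([3.0, 4.0], weight=[1.0, 1.0])
w_gate = [[0.1, 0.2], [0.3, -0.4], [0.5, 0.6]]
w_up = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
w_down = [[0.2, 0.1, -0.3], [0.4, 0.0, 0.5]]
print(swiglu_ffn(x, w_gate, w_up, w_down))
print([kv_head_for(h, num_q_heads=8, num_kv_heads=2) for h in range(8)])
```

With 8 query heads and 2 KV heads, the mapping is `[0, 0, 0, 0, 1, 1, 1, 1]`: the KV cache shrinks fourfold while each query head keeps its own projection.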

The Qwen team scaled the pre-training dataset to 18 trillion tokens, incorporating more diverse and high-quality data. Pre-training involved sophisticated data filtering, strategic data mixtures with a focus on knowledge, code, and math, and long-context training.

For post-training, they used intricate supervised fine-tuning (SFT) with over 1 million samples, followed by two-stage reinforcement learning: offline learning with DPO for complex reasoning, then online learning with GRPO for nuanced output quality.
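The two RL stages optimize different objectives. As a rough sketch, DPO minimizes a logistic loss on how much more the policy prefers the chosen response than a frozen reference model does, while GRPO scores each sampled response relative to the other samples for the same prompt. The function names, `beta` value, and example numbers below are assumptions for illustration, not the Qwen team's training code.

```python
import math
import statistics

def dpo_loss(policy_chosen_logp, policy_rejected_logp,
             ref_chosen_logp, ref_rejected_logp, beta=0.1):
    """DPO loss for one preference pair, given summed sequence log-probs."""
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)), written in a numerically stable form.
    return math.log1p(math.exp(-abs(logits))) + max(-logits, 0.0)

def group_relative_advantages(rewards):
    """GRPO-style advantages: standardize rewards within one prompt's group."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)
    if std == 0.0:
        return [0.0 for _ in rewards]  # no signal if all samples score the same
    return [(r - mean) / std for r in rewards]

# A policy identical to the reference sits at log(2); preferring the chosen
# response more strongly than the reference lowers the loss.
print(dpo_loss(-10.0, -12.0, -10.0, -12.0))
print(dpo_loss(-8.0, -14.0, -10.0, -12.0))
print(group_relative_advantages([1.0, 0.0, 0.0, 1.0]))  # [1.0, -1.0, -1.0, 1.0]
```

DPO works offline from fixed preference pairs, whereas the group-relative advantages require sampling fresh responses from the current policy, which is why the second stage is the online one.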

The models leveraged YaRN and Dual Chunk Attention (DCA) for extended context lengths, up to 1 million tokens for Qwen2.5-Turbo.

These advances in training resulted in better human preference alignment, enhanced long text generation, and improved structured data analysis.

Evaluations demonstrate top-tier performance on language understanding, mathematics, coding, and human preference alignment, and the report also highlights Qwen2.5’s long context capabilities. For example, Qwen2.5-Turbo achieved 100% accuracy on a 1M-token passkey retrieval task. Qwen2.5 further served as a foundation for their latest and greatest specialized models: Qwen2.5-Math, Qwen2.5-Coder, QwQ, and multimodal models such as QvQ.
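A passkey retrieval task hides a short secret inside a long stretch of irrelevant text and asks the model to recall it. The sketch below shows one way such a test case could be built and scored; the filler sentences, prompt wording, and function names are hypothetical and are not the exact protocol from the report.

```python
import random

FILLER = "The grass is green. The sky is blue. The sun is yellow."

def build_passkey_prompt(num_filler_lines, passkey, seed=0):
    """Return (prompt, position) with the passkey hidden at a random line."""
    rng = random.Random(seed)
    lines = [FILLER] * num_filler_lines
    position = rng.randrange(len(lines) + 1)
    lines.insert(position, f"The pass key is {passkey}. Remember it.")
    lines.append("What is the pass key?")
    return "\n".join(lines), position

def is_correct(model_answer, passkey):
    """Score a reply as correct if it contains the passkey."""
    return str(passkey) in model_answer

prompt, position = build_passkey_prompt(num_filler_lines=1000, passkey=71432)
print(f"Passkey hidden at line {position} of {prompt.count(chr(10)) + 1}")
print(is_correct("The pass key is 71432.", 71432))  # True
```

Scaling `num_filler_lines` until the prompt reaches the target token count, and sweeping the insertion position, gives the accuracy-by-depth grid that long-context reports typically show.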

While most proprietary AI model vendors are keeping technical details under wraps, the Qwen and DeepSeek teams have been refreshingly open about their models in their respective Technical Reports. This openness both helps advance the AI industry and shows us that open AI models are fast followers of the leading AI models.

The original BERT model (BERT stands for Bidirectional Encoder Representations from Transformers) is an encoder-only transformer model developed to learn language representations from unlabeled text by jointly conditioning on both left and right context in all layers. The BERT model has many uses in information-retrieval and language processing, such as semantic search, classification, and use in RAG applications, and it also paved the way for development of (decoder-only) LLMs.

Because the encoder architecture still has many applications today, the authors of “Smarter, Better, Faster, Longer: A Modern Bidirectional Encoder for Fast, Memory Efficient, and Long Context Finetuning and Inference” decided to update the BERT model, and the result is ModernBERT:

“In this paper, we introduce ModernBERT, bringing modern model optimizations to encoder-only models and representing a major Pareto improvement over older encoders.”

ModernBERT is pre-trained on 2 trillion tokens, incorporating a diverse dataset including code, and boasts a native sequence length of 8192 tokens. It leverages recent advancements in transformer architecture, including GeGLU activation, rotary positional embeddings (RoPE), and alternating local-global attention mechanisms. It uses full-model un-padding, which avoids wasting computation on padding tokens, combined with Flash Attention for optimized kernel usage.
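To see why un-padding helps, compare a padded batch with its un-padded equivalent. The sketch below concatenates the sequences into one flat list and records the boundaries as cumulative lengths; the name `cu_seqlens` mirrors the convention used by variable-length attention kernels, but the functions here are illustrative assumptions rather than ModernBERT's code.

```python
def pad_batch(sequences, pad_id=0):
    """Conventional batching: pad every sequence to the longest one."""
    max_len = max(len(s) for s in sequences)
    return [s + [pad_id] * (max_len - len(s)) for s in sequences]

def unpad_batch(sequences):
    """Concatenate sequences and record boundaries as cumulative lengths."""
    flat, cu_seqlens = [], [0]
    for s in sequences:
        flat.extend(s)
        cu_seqlens.append(len(flat))
    return flat, cu_seqlens

def split_by_boundaries(flat, cu_seqlens):
    """Recover the original sequences from the flat layout."""
    return [flat[a:b] for a, b in zip(cu_seqlens, cu_seqlens[1:])]

batch = [[5, 6, 7, 8, 9, 10], [11, 12], [13, 14, 15]]
padded = pad_batch(batch)
flat, cu_seqlens = unpad_batch(batch)

padded_slots = sum(len(row) for row in padded)
print(cu_seqlens)                                      # [0, 6, 8, 11]
print(f"{padded_slots - len(flat)} of {padded_slots} slots are padding")  # 7 of 18
```

In this toy batch, more than a third of the padded positions carry no information. Doing the un-padding once at the input and keeping the flat layout through every layer is what “full-model” refers to.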

ModernBERT has two variants, ‘base’ (149M params) and ‘large’ (395M params).

ModernBERT models exhibit state-of-the-art results on a large pool of evaluations encompassing diverse classification tasks and both single and multi-vector retrieval on different domains (including code).

In Natural Language Understanding (NLU) on the GLUE benchmark, ModernBERT-base outperforms previous base models, and ModernBERT-large nearly matches the best large model while being significantly more efficient. On the BEIR benchmark, ModernBERT demonstrates superior performance in both single-vector and multi-vector retrieval.

In addition to strong downstream performance, ModernBERT is also the most speed and memory efficient encoder and is designed for inference on common GPUs.

In summary, ModernBERT is an updated, streamlined and more efficient version of BERT for faster and higher-quality results across a number of information retrieval and natural language processing tasks.

Reasoning models such as o1 and o3 are understood to work by training the AI model on a chain-of-thought (CoT) reasoning process that is expressed in tokens. The Meta researchers behind “Training Large Language Models to Reason in a Continuous Latent Space” make an interesting observation:

“However, we argue that language space may not always be optimal for reasoning. For example, most word tokens are primarily for textual coherence and not essential for reasoning, while some critical tokens require complex planning and pose huge challenges to LLMs. To explore the potential of LLM reasoning in an unrestricted latent space instead of using natural language, we introduce a new paradigm Coconut (Chain of Continuous Thought).”

The authors observe that the LLM’s last hidden state may have more expressive power than output language tokens. This is similar to thinking about a geometry problem by visualizing it rather than talking it out; words sometimes limit thinking.

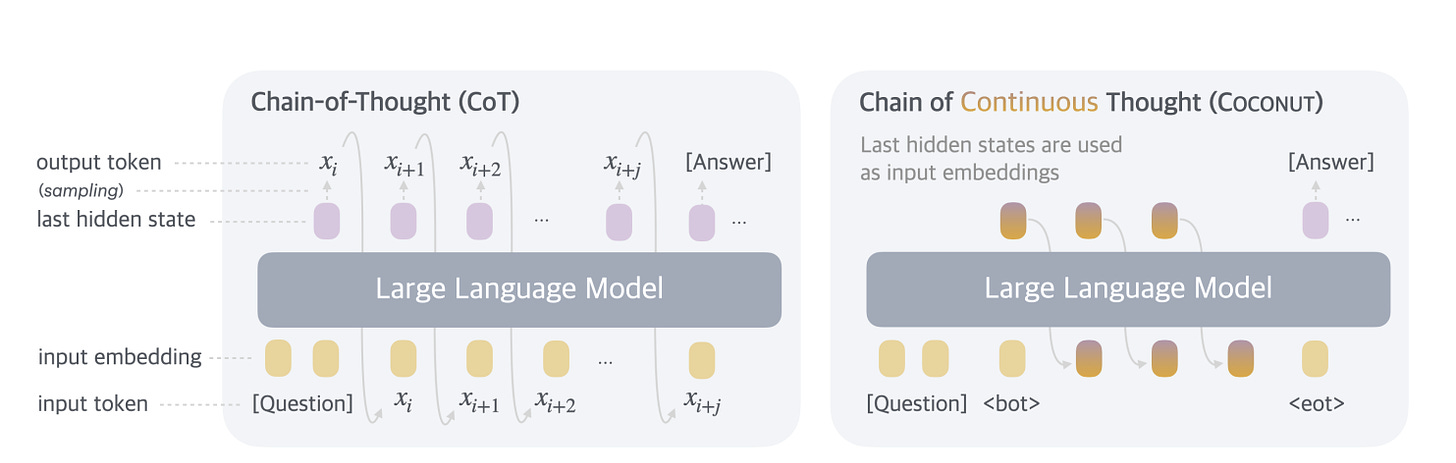

Unlike traditional Chain-of-Thought (CoT) methods where reasoning is expressed through word tokens, Coconut leverages the LLM’s last hidden state as a “continuous thought,” which is then fed directly back into the model as the subsequent input embedding. This departs from mapping to word tokens, enabling the LLM to reason in a more flexible, unrestricted space.
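The difference between the two loops is easy to show with a toy. In the sketch below, `toy_model` is a made-up stand-in for a transformer that maps a list of input embeddings to a last hidden state; the embeddings, vocabulary, and function names are all hypothetical. What matters is the data flow: CoT collapses each hidden state to a word token before continuing, while the Coconut-style loop appends the hidden state itself as the next input.

```python
import math

DIM = 4
VOCAB = ["yes", "no", "maybe"]
# Hypothetical fixed embeddings for a toy model.
EMBED = {
    "yes": [1.0, 0.0, 0.0, 0.0],
    "no": [0.0, 1.0, 0.0, 0.0],
    "maybe": [0.0, 0.0, 1.0, 0.0],
    "<q>": [0.0, 0.0, 0.0, 1.0],
}

def toy_model(input_embeddings):
    """Stand-in for a transformer: returns a 'last hidden state' vector."""
    n = len(input_embeddings)
    mean = [sum(e[d] for e in input_embeddings) / n for d in range(DIM)]
    return [math.tanh(mean[(d + 1) % DIM] + 0.5 * mean[d]) for d in range(DIM)]

def decode_token(hidden):
    """Language mode: pick the vocabulary word closest to the hidden state."""
    scores = {tok: sum(h * e for h, e in zip(hidden, EMBED[tok])) for tok in VOCAB}
    return max(scores, key=scores.get)

def cot_generate(prompt_tokens, num_steps):
    """CoT: every step is collapsed to a word token and re-embedded."""
    tokens = list(prompt_tokens)
    for _ in range(num_steps):
        hidden = toy_model([EMBED[t] for t in tokens])
        tokens.append(decode_token(hidden))
    return tokens

def coconut_generate(prompt_tokens, num_latent_steps):
    """Coconut-style: feed the hidden state back as the next input embedding."""
    inputs = [EMBED[t] for t in prompt_tokens]
    for _ in range(num_latent_steps):
        hidden = toy_model(inputs)
        inputs.append(hidden)  # continuous thought, never mapped to a word
    answer = decode_token(toy_model(inputs))  # back to language mode
    return answer, inputs

answer, inputs = coconut_generate(["<q>"], num_latent_steps=3)
print(answer, len(inputs))
```

In the latent loop, the intermediate inputs are arbitrary real-valued vectors rather than rows of the embedding table, which is what gives the model room to represent more than one candidate step at a time.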

A key advantage of this latent reasoning paradigm is the emergence of advanced reasoning patterns, particularly the ability to encode multiple potential next reasoning steps within the continuous thought:

“The continuous thought can encode multiple alternative next reasoning steps, allowing the model to perform a breadth-first search (BFS) to solve the problem, rather than prematurely committing to a single deterministic path like CoT. Coconut outperforms CoT in certain logical reasoning tasks that require substantial backtracking during planning, with fewer thinking tokens during inference.”

The use of latent space works better for reasoning because it allows for deferral of some decisions that get ‘locked in’ by choosing specific tokens, which enables a better search.
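A discrete analogy may help here. The sketch below contrasts a searcher that commits to the first available step at every point with a breadth-first search that keeps all candidate paths alive. This is only an analogy on a made-up graph: Coconut holds its alternatives implicitly in a continuous vector rather than in an explicit queue.

```python
from collections import deque

# Hypothetical reasoning graph: the first option at "start" leads nowhere.
GRAPH = {
    "start": ["a", "b"],
    "a": ["dead_end"],
    "b": ["c"],
    "c": ["goal"],
    "dead_end": [],
    "goal": [],
}

def greedy_commit(graph, start, goal, max_steps=10):
    """Always take the first listed edge and never reconsider."""
    node, path = start, [start]
    for _ in range(max_steps):
        if node == goal:
            return path
        if not graph[node]:
            return None  # stuck, with no way to backtrack
        node = graph[node][0]
        path.append(node)
    return None

def breadth_first(graph, start, goal):
    """Keep every candidate path alive until one reaches the goal."""
    queue, seen = deque([[start]]), {start}
    while queue:
        path = queue.popleft()
        if path[-1] == goal:
            return path
        for nxt in graph[path[-1]]:
            if nxt not in seen:
                seen.add(nxt)
                queue.append(path + [nxt])
    return None

print(greedy_commit(GRAPH, "start", "goal"))  # None
print(breadth_first(GRAPH, "start", "goal"))  # ['start', 'b', 'c', 'goal']
```

The early commitment to `a` is the kind of decision that gets locked in by emitting a specific token; deferring it lets the search discard the dead end later, once more of the graph has been explored.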

Experiments demonstrate that Coconut enhances performance in mathematical reasoning (GSM8k) and logical reasoning (ProntoQA, ProsQA), generating significantly fewer tokens during inference while demonstrating a more efficient reasoning process.

The authors employ a multi-stage training strategy inspired by previous work, which utilizes language reasoning chains to guide the learning process. The method effectively alternates between “language mode” and “latent mode” using special tokens to denote the beginning and end of latent thoughts.
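One way to picture such a curriculum is as a function that rewrites each training example per stage, progressively swapping language reasoning steps for latent thought slots. The sketch below is our reading of the idea, not the authors' code: the marker strings `<bot>` and `<eot>`, the `<latent>` placeholder, and the `thoughts_per_step` parameter are hypothetical names chosen for illustration.

```python
def build_stage_example(question, reasoning_steps, answer, stage,
                        thoughts_per_step=1,
                        bot="<bot>", eot="<eot>", latent="<latent>"):
    """Build one training sequence for a given curriculum stage.

    At stage k, the first k language reasoning steps are replaced by latent
    thought slots wrapped in begin/end markers; later steps stay as text.
    Stage 0 is ordinary chain-of-thought (an assumption of this sketch).
    """
    k = min(stage, len(reasoning_steps))
    if k == 0:
        return [question, *reasoning_steps, answer]
    latent_slots = [latent] * (k * thoughts_per_step)
    return [question, bot, *latent_slots, eot, *reasoning_steps[k:], answer]

steps = ["step 1", "step 2", "step 3"]
for stage in range(4):
    print(stage, build_stage_example("Q", steps, "A", stage))
```

Each `<latent>` slot marks a position where, during training, the model's previous hidden state would be fed in as the input embedding instead of a word. The remaining language steps and the answer still provide the supervision signal, which is how the language chains guide the latent ones.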

The study indicates the potential for scaling latent reasoning to more complex problems. This initial step could lead to important advances for reasoning models: AI reasoning models could contemplate via internal thoughts (carried in their latent hidden states) as a process distinct from expressing chain-of-thought verbally (by outputting specific tokens).